How denormalization works in a column data store

Denormalized data models provide increased performance and ease of use. Most documentation about denormalization is taken from the view of the row-based data store, not the column-based data store. Row-based data store environments may encounter negative aspects when using denormalization, such as

– Using more disk space

– Being required to maintain the same data in more than one table

– More expensive secondary processing to create the denormalized layout

– Running the risk that the data can get “out of sync”

– Index bloating

Row-based database vendors also suggest having the normalized or third-normal form first, which means having to maintain two different data models. Normalization in a relational database is a suggested approach to structuring information in order to avoid redundancy and inconsistency, and to promote efficient maintenance, storage, and updating. Why do they feel this way? Let’s look at a simple data model with one dimension and one fact table.

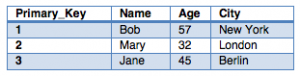

###Customers Table

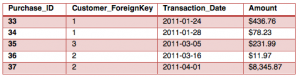

###Purchases Table

By keeping the customer information data separated, its table will not require updating as frequently, since customer demographics do not change very often and new customers do not appear as often as purchases. As well, as new purchases are entered, less data needs to be managed in the database. There is also additional ID information (or Keys) to link the two tables and apply referential integrity. Now let’s see what it looks like from a denormalized standpoint.

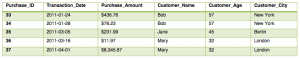

###Denormalized Table

As you can see, the customer information is repeated multiple times. If there were millions of transactions, this could add up to a lot of redundant data. However, column-based data stores get around this issue and most of the other complaints made from the row-based alternatives.

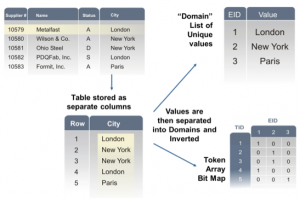

###Column-based, tokenized, bit-array encoded

First, by storing only the columns they do not store redundant data. This also means that column-based stores should not encounter significant index bloating as the bitmap vectors will compress at higher percentages as the data redundancy increases. Second, the reduced data volumes improve I/O operations such as inserts and updates. As well, if an insert or update into a field already contains that value, then only the associated mapping requires updating. As you can see above, the same information is stored in both forms, and so with proper field-naming conventions, there is no immediate need for a normalized model in an analytical database. In addition, extra key fields that are created primarily to improve join conditions can be removed. Of course, the most important performance gain is on the query side. The primary performance gain is the removal of expensive joins. End users can now look at a single wide list of fields and choose the ones they need, while the database only has to deal with the columns mentioned in the query and not all of the fields contained in every table mentioned in the query.

###Denomalized tables are not aggregate or summary tables

On a side note, denormalization is often confused with aggregate or summary tables. Denormalization is the merging of the data from multiple tables into a single wide view of the fields. Aggregate tables may still be used to improve performance of frequently executed queries, such as summing up the Purchase_Amount by customer. Aggregation or summary tables may be covered in a later blog.