Column-oriented Part 1: The I/O Advantage

Column-oriented Database Management Systems (CDBMS), also referred to as columnar databases and CBAT, have been getting a lot of attention recently in the data warehouse marketplace and trade press. Interestingly, some of the newer companies offering CDBMS-based products give the impression that this is a entirely new development in the RDBMS arena. I don’t know where they have been for the last 10 years! This technology has actually been around for quite a while, and at SAND we have been working with it since 1987. But the market has only recently started to recognize the many benefits of CDBMS. So, why is CDBMS now coming to be recognized as the technology that offers the best support for very large, complex data warehouses intended to support ad hoc analytics? In my opinion, one of the fundamental reasons is the reduction in I/O workload that it enables.

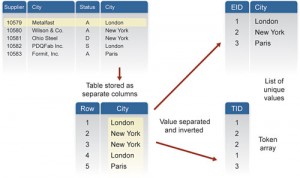

The main problem with traditional row-oriented RDBMS technology is that the size of the rows in a table directly affects the performance of any SQL request targeting that table. In reality, however, the database object addressed by analytic queries is the column. In a row-oriented database, every column is locked in its place within the row (also called a record), and each time the RDBMS needs to access a specific column, the full row must be accessed. In other words, the level of granularity of I/O operations is the record.

Let’s look at an example to illustrate the negative impact of this approach: imagine a table with 1 million rows, each having a total size of 1024 bytes. Each row is composed of 128 columns, one of which holds telephone numbers that are 10 bytes long. A query is executed to extract all the phone numbers starting with the area code 514 (constituting about 10 percent of the records).

Using a traditional RDBMS with no indexing on the specified column, a full table scan will be required to satisfy this request: this means 1 GB of data (1,000,000 x 1000 bytes) will have to be read from storage. If an index has been defined on the column, then only 100 MB of data will have to be read.

With a CDBMS database, on the other hand, the record has been decomposed into its constituent columns, so the level of granularity of I/O operations is the column. When individual columns are targeted to respond to the same query, the worst-case scenario is that 10 MB of data will have to be read from storage.

SAND CDBMS products use columnar decomposition as described above. They also implement a tokenization process that might be described as de-duplication at the level of column values. I will discuss this technique in another blog post. Finally, advanced compression algorithms are applied to the data structures, frequently achieving compression ratios of 90% or more. This again reduces the amount of data read from storage during query execution. For the example we are using here, this would translate to just 1MB of data transferred, representing a 1000X – 100X reduction compared to a traditional row-oriented RDBMS.

The I/O workload reduction resulting from the use of CDBMS technology can be very significant, especially these days when processor speeds are improving consistently but I/O operational speeds are increasing very slowly.

With today’s very large databases, it is not unusual to see fact tables that have many billions of records. With a traditional row-oriented RDBMS, adding a single character per row in a billion-row table would automatically add 1 GB of I/O operations. For this reason, data modeling is a much more complex and limiting task when a row-oriented RDBMS is involved. This data modeling issue will be the topic of my next post from the bus.

Richard Grondin