Nearline 2.0 and the Data Management Framework

In my last post, I outlined some of the advanced data modeling options made possible by the advent of Nearline 2.0. Today, I want to discuss how Nearline 2.0 can act as an essential component of a data management framework. The data management framework, which can be viewed as an extension of the data model concept to the level of enterprise data architecture, governs the processing and management of enterprise data throughout its “lifecycle”, from creation to disposal. It includes all operational components and addresses key issues such as data backup, disaster recovery, data retention and data access security.

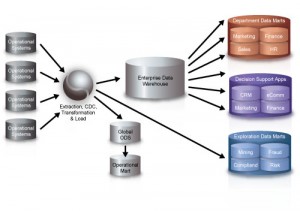

Let’s start at the beginning of the process. In a traditional data warehouse implementation, data is extracted from operational systems, then transformed and loaded into the data warehouse. Figure 1 shows this process in the context of the Corporate Information Factory. It is usually called ETL (Extract, Transform and Load), or ELT (Extract, Load and Transform) when the transformation is performed after the data has been loaded.

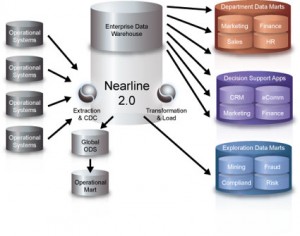

In previous posts, I presented Nearline 2.0 as a “database extension” that can reduce total cost of ownership (TCO), guarantee that SLAs are met, and improve the performance of the online database that underpins the data warehouse solution. This configuration is based on the traditional concept of Information Lifecycle Management (ILM), normally implemented as a tactical effort to protect the enterprise against problems associated with the “data tsunami”. Figure 2 illustrates how Nearline 2.0 is incorporated into the data management framework as a database extension that stores (and maintains access to) older or less frequently used detail data.

While this ILM concept is sound, it has important limitations because it relies on migrating data from the online database to the nearline repository, with all the technical and human resource requirements this entails. Is it really necessary to do it this way? In my last post, I introduced the notion of Just-in-Case (JIC) data: the detail data that underlies data warehouse summary tables and to which access must be maintained. If the JIC data (which is static by definition) is “nearlined” at creation time, or as soon as possible afterwards, we can avoid the costs and system impact of moving it into the data warehouse. Why migrate JIC data to the online data warehouse, only to face the additional costs and hassles of archiving it later on? This is a vital consideration when making strategic decisions about the enterprise data management framework.
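To make the cost argument concrete, here is a toy Python sketch; it is not SAND’s implementation or any product API. It models the online warehouse and the nearline repository as hypothetical in-memory `Tier` objects that simply count the rows written to them, and compares two flows for the same batch of detail rows: an ILM-style flow, where JIC detail is loaded online and later migrated and purged, and a direct flow, where only the summary goes online and the detail is written straight to nearline. The `(key, amount)` row shape and all names (`Tier`, `summarize`, `load_then_archive`, `direct_to_nearline`, `run`) are assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Tier:
    """Hypothetical storage tier (online DB or nearline store) that counts row writes."""
    name: str
    tables: dict = field(default_factory=dict)
    rows_written: int = 0

    def write(self, table, batch):
        # Append rows to the named table and record how many rows were written.
        self.tables.setdefault(table, []).extend(batch)
        self.rows_written += len(batch)

    def drop(self, table):
        # Purge a table from this tier (e.g. after it has been archived elsewhere).
        self.tables.pop(table, None)


def summarize(detail):
    """Aggregate (key, amount) detail rows into one summary row per key."""
    totals = defaultdict(float)
    for key, amount in detail:
        totals[key] += amount
    return sorted(totals.items())


def load_then_archive(detail, online, nearline):
    """ILM-style flow: JIC detail is loaded online, then migrated and purged."""
    online.write("summary", summarize(detail))
    online.write("detail", detail)                     # initial load into the warehouse
    nearline.write("detail", online.tables["detail"])  # later archiving job copies it out
    online.drop("detail")                              # ...and removes it from the online DB


def direct_to_nearline(detail, online, nearline):
    """Direct flow: only the summary goes online; JIC detail is nearlined at creation."""
    online.write("summary", summarize(detail))
    nearline.write("detail", detail)


def run(flow, detail):
    """Apply one flow to fresh, empty tiers and return them for inspection."""
    online, nearline = Tier("online"), Tier("nearline")
    flow(detail, online, nearline)
    return online, nearline


if __name__ == "__main__":
    detail = [("north", 10.0), ("south", 5.0), ("north", 2.5)]
    for flow in (load_then_archive, direct_to_nearline):
        online, nearline = run(flow, detail)
        total = online.rows_written + nearline.rows_written
        print(f"{flow.__name__}: {total} rows written "
              f"(online={online.rows_written}, nearline={nearline.rows_written})")
```

Both flows end in the same state (summary online, detail nearline), but for this three-row batch the ILM-style flow writes 8 rows and needs a purge step, while the direct flow writes 5. With production volumes, that extra movement of detail data is the cost the direct approach avoids.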

It clearly makes much more sense for the JIC data to be stored directly in the Nearline 2.0 repository. At SAND, we call this type of architecture the Corporate Information Memory (CIM); it is only possible because Nearline 2.0 technology is available. It is illustrated in figure 3.

The key benefit of this architecture for any enterprise is that it evolves from the current architecture, and so protects existing investments in people skills, software and equipment. This fits perfectly with Gartner’s observations in a recent report, “Key Issues for Delivering a Data Warehouse Project, 2008”:

“The big theme for data warehousing in 2008 is the increased demand for more data, in more places and doing so with an evolutionary approach. Data warehouses are mission-critical and integrated into operations. Failure to support business process change and inflexibility will not be tolerated. The problem is how to take the years of effort and funds invested previously and leverage them into a modern data warehouse because ‘rip and replace’ strategies are not acceptable.”

It is therefore easy to see how Nearline 2.0 can act as a pivotal element in an enterprise data management framework, and why it offers one of the best available solutions to the critical issues that have arisen in this area. In my next post, I will discuss the benefits of this type of architecture in more detail.

Richard Grondin