Column-oriented Part 2: Flexible Data Modeling for a Simplified End User Experience

In my last blog post, I explained how Column-oriented Database Management Systems (CDBMS), also known as columnar databases or CBAT, offer a distinct advantage over the traditional row-oriented RDBMS in terms of I/O workload, deriving primarily from basing the granularity of I/O operations on the column rather than the entire row. This technological advantage has a direct impact on the complexity of data modeling tasks and on the end-user’s experience of the data warehouse, and this is what I will discuss in today’s post.

When designing the data model in a standard row-oriented RDBMS, the I/O sub-system is a major concern. Tables need to be designed to reduce the row weight (or average row size). With this constraint in mind, a data architect will tend to normalize the data model as much as possible, and/or use a traditional star or snowflake schema. The complexity of the resulting data model will directly affect the ability of end users to execute ad hoc requests on the data. Often, the data model is so complex that business users will not be able to figure out how to design their queries – obviously compromising the ability of the system to transform enterprise data into enterprise knowledge. For this reason, techniques have been developed to simplify data access, typically involving summary tables, materialized views or cubes.

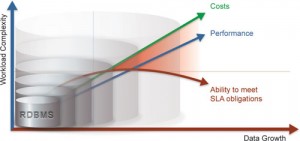

Another technical problem that is encountered when designing data models for a traditional row-oriented RDBMS relates to the issue of slowly-changing dimensions. Very often, these will necessitate complex join operations between the fact table and the dimension tables, based on time periods and other keys. This too can make it very difficult for business users to navigate the corporate data: how can they be sure that joins will always be clearly defined and produce the right results? Often, the larger the database, the more complex the data model will be, and the more difficult it will be to maintain good performance. Frequently, business users will be limited to simple queries that don’t produce much real business value, and will ultimately come away with a poor overall experience of the data warehouse.

A CDBMS database, on the other hand, offers extreme flexibility in data modeling, mainly because row weight is no longer an issue. The number of columns in a table is not really important when a query is executed, because only the columns referenced in the query will be read into memory. How does this affect data model design? The first advantage is that it enables data denormalization, which reduces data model complexity. What business user doesn’t understand an Excel worksheet? This “flat” view of the data is precisely what data denormalization can bring. Denormalization can be full or partial depending on the aspect of the data model that needs to be simplified.

Another advantage of CDBMS in data modeling is the reduced complexity of managing slowly changing dimension tables. Attributes associated with slowly changing dimensions can be directly integrated into the fact table at the time the rows are inserted, so the attributes will be in synch and the number of join operations will be reduced. If required, a dimension table can also be used to enable data analysis based on either current or past dimension settings. For example, after restructuring a the structure of a product family, it will be possible to execute sales analyses comparing the historical product family structure with the new one.

When the data model is simplified, DBA tasks are simplified as well, since there are less joins to define and manage. Depending on the nature of the CBAT implementation, another advantage for the DBA is a reduction of the costs associated with data model change. The column decomposition concept makes it is easy to add or remove a column of a given table or to change its size. These kinds of change can be applied without having to offload/reload data or reorganize a table, often very expensive processes to execute.

Removing the constraints on I/O sub-system performance from the data modeling phase enables a better end-user experience, and should improve the quality of knowledge within the enterprise. End-users will be able to execute ad hoc queries more easily to discover new patterns in the data, resulting in better business intelligence. The DBA’s job will also become less complex: instead of tuning the data model for performance, they will be in a position to tune the data model to enable easy access. This advantage directly translates into business value, and improves the overall return on investment in the data warehouse. While this might require implementation of a more complex ETL process, if the result is delivery of real business value and business user empowerment, the trade-off will normally be considered worthwhile.

The ability to react more quickly to any market change: isn’t this what any enterprise would wish to make possible with their data warehouse? The CDBMS approach is great way to achieve this goal.

Richard Grondin